Bugster vs. AI Code Review Tools: The Real Browser Advantage

Why testing your app like a real user complements code analysis for comprehensive bug detection

Why This Comparison Matters

In frontend development, not all bugs are created equal. AI Code Review tools analyze your diff and examine code semantics, while AI E2E Testing tools execute your app like real users do.

Think of it like this: one tool reads your recipe, the other actually cooks the dish. Both approaches are valuable, but they surface very different kinds of mistakes. Understanding this split helps you build a bug detection strategy that doesn’t leave blind spots.

What AI Code Review Tools Do (Greptile, BugBot, CodeRabbit)

AI Code Review tools plug directly into your PRs. They analyze code semantics, logic patterns, guards, and validations. Their strength? Catching deterministic bugs in your source code before it ever ships.

Take Greptile: it understands your entire codebase context and spots complex logic errors, security vulnerabilities, and architectural anti-patterns that human reviewers often miss.

What these tools excel at:

Logic and control flow errors

Security vulnerabilities (SQL injection, XSS in code)

Code quality and maintainability issues

Architectural anti-patterns

Type safety and null reference errors

Dead code and redundancy detection

In short: they’re like having a super-powered static analysis reviewer who never gets tired.

What AI E2E Testing Tools Do (Bugster)

AI E2E tools like Bugster go down a different path: instead of reading your recipe, they step into the kitchen. Bugster spins up real browsers, navigates your app, clicks the buttons, fills the forms, and validates what happens in the messy, real-world runtime.

Bugster’s destructive agent adds another layer: it looks at your PR changes and stress-tests those modified areas directly. That means your new features and fixes get hammered under realistic conditions before users ever touch them.

What Bugster excels at:

User interaction flows and state consistency

Visual layout and responsive design issues

Real browser runtime errors

End-to-end user flows with external services

Performance under real network conditions

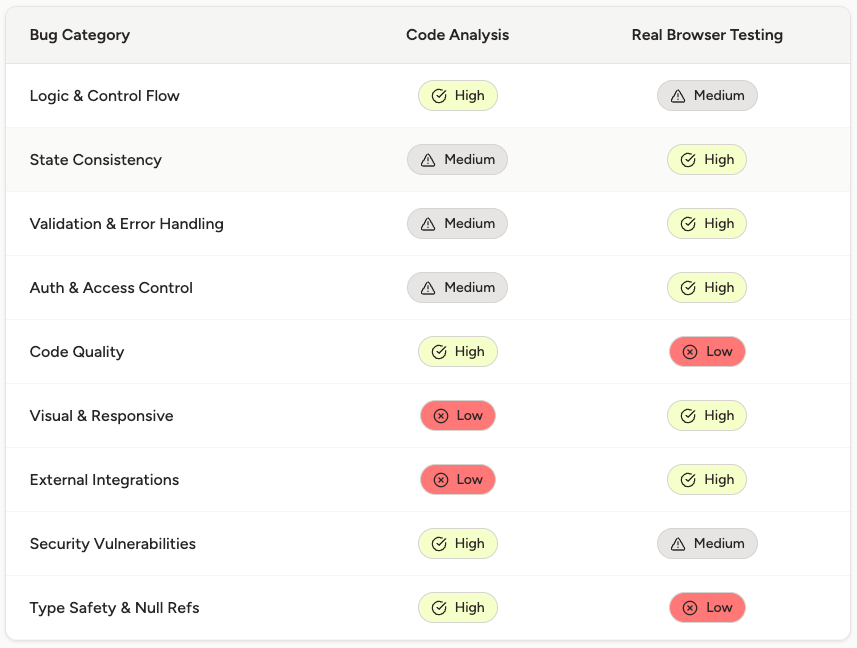

Bug Detection Matrix: What Each Tool Actually Catches

This is the core tradeoff: static tools safeguard your code logic; real-browser tools safeguard your user experience.

Illustrative Cases: When Each Tool Shines

Case 1: Authentication Token Expiration

The Code (passes all static analysis):

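// This looks perfect to AI Code Review Tools
import { createContext, useState } from 'react';

// Assumed here so the snippet is complete; the original omits where AuthContext is created
const AuthContext = createContext(null);

const AuthProvider = ({ children }) => {
  // Seed the token from localStorage on first render
  const [token, setToken] = useState(localStorage.getItem('token'));

  // Request a fresh token and store it in state
  const refreshToken = async () => {
    const response = await fetch('/api/refresh');
    const newToken = await response.json();
    setToken(newToken);
  };

  return (
    <AuthContext.Provider value={{ token, refreshToken }}>
      {children}
    </AuthContext.Provider>
  );
};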

What AI Code Review Tools catch: The logic looks sound, with no security vulnerabilities in the code

What Bugster catches:

User gets logged out mid-checkout because token expires

Refresh mechanism fails on slow connections

User loses form data when authentication redirects occur

Error messages don't appear properly when auth fails
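One common mitigation for the mid-checkout logout is to refresh the token before a long flow starts rather than after it fails. The following is a minimal sketch, not part of the original AuthProvider: `needsRefresh`, `expiresAtMs`, and `leewayMs` are hypothetical names, and it assumes the app can read the token's expiry as an epoch-millisecond timestamp.

```javascript
// Hypothetical helper: decide whether to refresh before starting a long flow.
// expiresAtMs and nowMs are epoch milliseconds; leewayMs is a safety margin.
function needsRefresh(expiresAtMs, nowMs = Date.now(), leewayMs = 60000) {
  if (!Number.isFinite(expiresAtMs)) {
    // Unknown expiry: treat the token as stale rather than risk a mid-flow logout.
    return true;
  }
  return expiresAtMs - nowMs <= leewayMs;
}

// Example: a token expiring in 30 seconds is refreshed before checkout begins.
const exampleNow = 1700000000000;
console.log(needsRefresh(exampleNow + 30000, exampleNow));  // true
console.log(needsRefresh(exampleNow + 600000, exampleNow)); // false
```

A check like this reads as correct in review either way; whether it actually fires before the user reaches the payment step is something only a run through the real flow confirms.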

Case 2: Calendar End-of-Month Edge Case

The Code (perfect logic according to static analysis):

// AI Code Review Tools see no issues here
function getNextAvailableDate(currentDate) {
  const next = new Date(currentDate);
  next.setDate(next.getDate() + 1);
  return next;
}

What AI Code Review Tools catch: The date logic is mathematically correct

What Bugster catches:

Calendar breaks when users select January 31st and advance a month (February has no 31st)

Date picker shows impossible dates in February

Timezone shifts cause booking conflicts

Mobile date picker has different behavior than desktop
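The end-of-month failure typically comes from month arithmetic elsewhere in a calendar, not from the day increment in `getNextAvailableDate`, which rolls over correctly. The sketch below contrasts the two; `addMonthNaive` and `addMonthClamped` are hypothetical helpers written for illustration, and dates are built in local time to keep timezone parsing out of the picture.

```javascript
// The article's helper: day arithmetic rolls over month boundaries correctly.
function getNextAvailableDate(currentDate) {
  const next = new Date(currentDate);
  next.setDate(next.getDate() + 1);
  return next;
}

// Naive month step (hypothetical): Jan 31 becomes "Feb 31", which overflows into March.
function addMonthNaive(date) {
  const next = new Date(date);
  next.setMonth(next.getMonth() + 1);
  return next;
}

// Clamped month step (hypothetical): fall back to the last day of the target month.
function addMonthClamped(date) {
  const next = new Date(date);
  const day = next.getDate();
  next.setDate(1); // avoid overflow while changing the month
  next.setMonth(next.getMonth() + 1);
  // Day 0 of the following month is the last day of the target month.
  const lastDay = new Date(next.getFullYear(), next.getMonth() + 1, 0).getDate();
  next.setDate(Math.min(day, lastDay));
  return next;
}

const jan31 = new Date(2023, 0, 31); // local time; months are 0-based
console.log(getNextAvailableDate(jan31).toDateString()); // Wed Feb 01 2023
console.log(addMonthNaive(jan31).toDateString());        // Fri Mar 03 2023
console.log(addMonthClamped(jan31).toDateString());      // Tue Feb 28 2023
```

Each function looks reasonable in isolation, which is why this class of bug tends to surface only when someone actually clicks through the calendar at a month boundary.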

Case 3: Third-Party Checkout Integration

The Code (passes all code review standards):

// AI Code Review Tools approves this implementation
const processPayment = async (paymentData) => {
  try {
    const result = await stripe.createPaymentIntent(paymentData);
    return { success: true, paymentIntent: result };
  } catch (error) {
    return { success: false, error: error.message };
  }
};

What AI Code Review Tools catch: Error handling is properly implemented, with no obvious security issues

What Bugster catches:

Stripe modal doesn't load on slow 3G connections

Payment succeeds but confirmation page fails to load

Users can double-click and create duplicate charges

Payment form breaks when browser blocks third-party scripts

Success/failure states don't update UI properly
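The double-click case can be guarded on the client by letting repeat calls share the request that is already pending. This is a minimal sketch under stated assumptions: `singleFlight` is a hypothetical wrapper, and `fakeProcessPayment` is a stand-in for the `processPayment` function above, not a real payment call.

```javascript
// Hypothetical guard: while one call is pending, repeat calls reuse its promise.
function singleFlight(fn) {
  let inFlight = null;
  return (...args) => {
    if (inFlight) return inFlight;
    // The Promise executor runs synchronously, so fn starts immediately and
    // a synchronous throw becomes a rejection.
    inFlight = new Promise((resolve) => resolve(fn(...args))).finally(() => {
      inFlight = null; // allow a fresh attempt once this one settles
    });
    return inFlight;
  };
}

// Stand-in for processPayment; counts how often a charge is attempted.
let chargeCount = 0;
const fakeProcessPayment = async (paymentData) => {
  chargeCount += 1;
  return { success: true, paymentIntent: { amount: paymentData.amount } };
};

const pay = singleFlight(fakeProcessPayment);
const firstClick = pay({ amount: 500 });
const secondClick = pay({ amount: 500 }); // simulated double-click
console.log(firstClick === secondClick, chargeCount); // true 1
```

A client-side guard only covers one tab; a server-side idempotency key is a common complement. Whether the button in the rendered page is actually wired through the guard is what a real-browser run verifies.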

The Complementary Strategy: AI Code Review + Bugster

This isn’t about picking sides. The strongest teams layer both approaches.

1. Pre-Merge: AI Code Review

Catches logic errors, security vulnerabilities, and quality issues

Instant feedback in PR workflow

2. Pre-Deploy: AI E2E Testing (Bugster)

Validates user experience in real browsers

Exercises integrations, state, and UX edge cases

Ideal CI/CD Pipeline:

┌─────────────────┐
│ Code Review     │ ← Greptile, BugBot catch logic
│ Static Analysis │   & security bugs in source code
└─────────────────┘
         ↓
┌─────────────────┐
│ Real Browser    │ ← Bugster catches user experience
│ Testing         │   bugs in actual environment
└─────────────────┘
         ↓
┌─────────────────┐
│ Production      │ ← Maximum bug prevention
└─────────────────┘

Call to Action: Activate both layers. Run an AI code reviewer on PRs and Bugster on preview environments for your 5 core user flows. This combination gives you coverage from code quality through to user experience.

Conclusion: Two Tools, One Mission

AI Code Review Tools keep your codebase logically sound and secure. Bugster ensures that sound code translates into a seamless user experience.

The future of frontend isn’t static analysis or real browser testing; it’s both. Together, they give you confidence from commit to production.

Ready to see what your code reviewers can’t? Try Bugster’s destructive testing on your next PR and find the UX bugs hiding in plain sight.